Search engines have become an indispensable part of our modern digital lives. With over 5.19 billion internet users worldwide (according to Statista) relying on platforms like Google, Bing, and Baidu to navigate the web, understanding how these search tools work is more important than ever.

How do search engines manage to instantaneously comb through hundreds of billions of webpages and generate relevant results for our myriad queries? The secret lies in the ingenious, ever-evolving algorithms powering them.

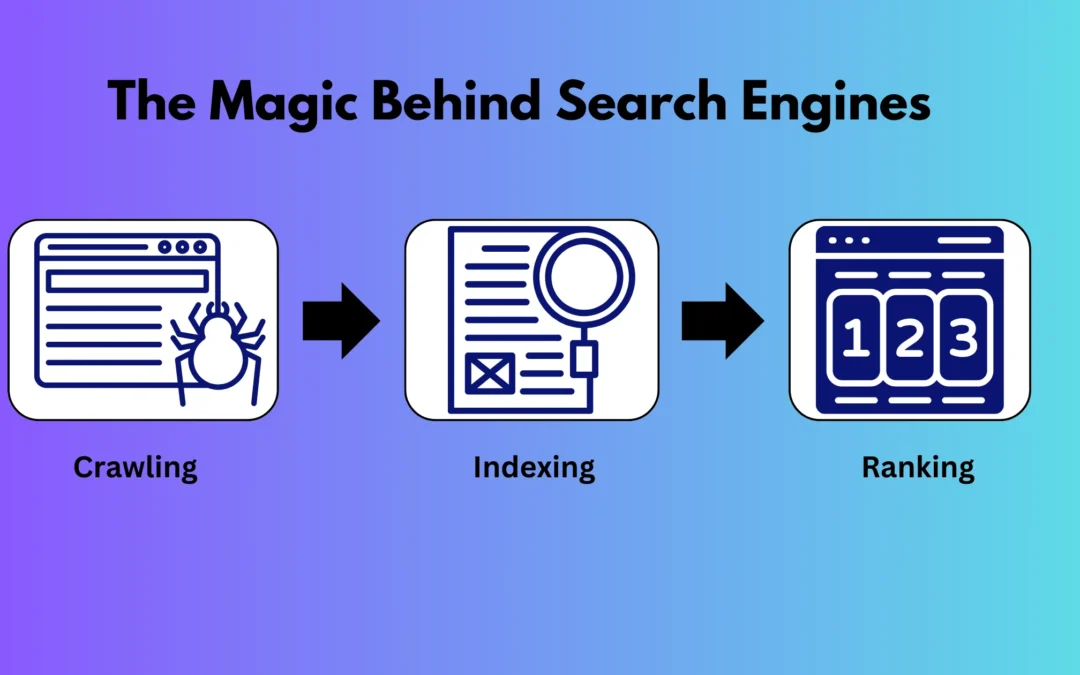

Stay with me on an illuminating journey as I unravel the 3 core secrets behind the magical algorithms at the heart of our favorite search engines. Gaining insight into the mathematical models and processes running quietly under the hood can empower you to optimize your website for greater visibility.

- Search Engines Secret #1: The Tireless Crawling of Billions of Webpages

- Secret #2: Organizing the Internet Through Billions of Lines of Index Code or Indexing

- Secret #3: Ranking Algorithms - The Brains Behind Search Relevancy

- The Future of Smarter, Faster Search

- Key Takeaways: How Search Algorithms Work Their Magic

- FAQs

Search Engines Secret #1: The Tireless Crawling of Billions of Webpages

The foundational work of any search engine starts with crawling, the process by which automated programs systematically browse the web to discover pages. These programs, also called bots, spiders, or crawlers, start with a list of seed URLs assigned to them by the search engine.

The crawlers visit these pages, extract all the hyperlinks, and then recursively follow those links to discover new pages. Along the way, they collect the raw data needed for indexing and ranking.

Google today relies on an army of server-side bots to carry out its crawling needs. These meticulous bots continuously crawl billions of web pages per day! Without such comprehensive sweeping capabilities, search engines would be helpless – their algorithms would have no way to access and analyze enough information to generate complete and relevant results.

During each crawling session, the bots work outward from their seeds: every newly discovered link joins the queue of pages to visit, and each visited page is recorded for later indexing. Exhaustive crawling of this kind maps out billions of hyperlink pathways across the web.
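To make the seed-and-follow idea concrete, here is a minimal sketch of a breadth-first crawler. It is an illustration only, not how any real search engine is built: the `TOY_WEB` dictionary, the `LinkExtractor` class, and the `crawl` function are all made-up names, and the "web" is an in-memory dictionary standing in for real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
TOY_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "<p>No links here</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl from the seed URLs; returns URLs in visit order."""
    frontier = deque(seeds)  # pages waiting to be visited
    seen = set(seeds)        # every URL ever queued, to avoid revisiting
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:  # page missing or unreachable
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


order = crawl(["https://example.com/"], TOY_WEB.get)
print(order)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, which happens constantly on the real web.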

Some critical aspects of pages cataloged by crawlers include:

- Page Content – All visible text-based content is extracted, including body text, titles, headings, image alt text, etc.

- Metadata – Data not visible on the page itself is also collected, including keywords, descriptions, author info, dates, etc.

- Media – Crawlers catalog and index all media content like embedded images, videos, documents, etc.

- Links – The intricate link structures within and between pages are analyzed to model website architecture.
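As a rough illustration of the four categories above, the sketch below pulls content, metadata, media, and links out of a single page using Python's built-in HTML parser. The `PageExtractor` class and the `SAMPLE` page are invented for this example, and real crawlers handle far messier markup.

```python
from html.parser import HTMLParser


class PageExtractor(HTMLParser):
    """Pulls out the title, visible text, meta tags, images, and links."""

    SKIP = {"script", "style"}  # contents of these tags are not visible text

    def __init__(self):
        super().__init__()
        self.title = ""
        self.text = []     # page content
        self.meta = {}     # metadata
        self.images = []   # media
        self.links = []    # links
        self._in_title = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "meta" and attrs.get("name"):
            self.meta[attrs["name"]] = attrs.get("content") or ""
        elif tag == "img":
            self.images.append(
                {"src": attrs.get("src") or "", "alt": attrs.get("alt") or ""}
            )
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._skip_depth == 0 and data.strip():
            self.text.append(data.strip())


# Hypothetical page used only for this demonstration.
SAMPLE = """
<html><head>
<title>Espresso Guide</title>
<meta name="description" content="How to pull a great shot.">
<meta name="author" content="Jane Doe">
<style>body { color: black; }</style>
</head><body>
<h1>Pulling a Shot</h1>
<p>Grind fine and tamp evenly.</p>
<img src="/img/shot.png" alt="Espresso shot">
<a href="/grinders">Grinders</a>
</body></html>
"""

page = PageExtractor()
page.feed(SAMPLE)
print(page.title, page.meta, page.text, page.images, page.links, sep="\n")
```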

Without comprehensive crawling capabilities, search algorithms would lack the raw material to work their magic. Crawling supplies the data from which search engines build their massive structured databases, ready for the subsequent critical phases: indexing and ranking.

Secret #2: Organizing the Internet Through Billions of Lines of Index Code or Indexing

Once crawlers gather data, it must be organized for fast, accurate searches. This is where indexing comes in: storing and structuring the content and metadata that crawlers collect so that it can be retrieved quickly and precisely.

This is accomplished by creating a searchable index database of all the content crawled from the web. Webpage data is processed and converted into structured, machine-readable records that can be searched and analyzed. Each webpage is assigned a unique document ID and associated attributes like title, body text, headings, links, media files, tags, dates, etc.

This index acts like a library catalog but for the internet. It carefully records and cross-references relationships between the billions of web pages and data points crawled by search engines. Sophisticated data structures like inverted indexes, graph databases, and hash tables allow search algorithms to scan the index and pull relevant matches in milliseconds.
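Of the data structures just mentioned, the inverted index is the easiest to picture. Here is a minimal sketch, assuming three tiny made-up documents in `DOCS`; the `tokenize` and `build_inverted_index` helpers are illustrative names, not part of any search engine's actual code.

```python
import re
from collections import defaultdict

# Hypothetical crawled documents: document ID -> text.
DOCS = {
    1: "Search engines crawl the web",
    2: "Crawlers follow links across the web",
    3: "An index maps words to pages",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """Map each term to the sorted list of document IDs that contain it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            postings[term].add(doc_id)
    return {term: sorted(ids) for term, ids in postings.items()}


index = build_inverted_index(DOCS)
print(index["web"])    # [1, 2]
print(index["index"])  # [3]
```

Instead of scanning every document for a word, the engine looks the word up once and gets back the list of documents that contain it, much like the index at the back of a book.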

Without comprehensively indexing the web in this manner, search engines would be unable to quickly retrieve and connect information relevant to user queries. Indexing is crucial for ensuring speed and comprehensiveness when searching at internet scale.

Enhancing indexing algorithms also powers innovations like natural language processing, semantic search, and contextual ranking. Overall, indexing gives the stored content meaning and structure so that search engines can rapidly analyze it to find and serve the most useful results for searchers.

The raw content dug up by crawlers gets converted into billions of structured records for storage in a search index database. Each webpage has a unique document identifier along with associated attributes and text. Connections between web documents are also logged to power link-aware search results.

Some key data points indexed for each page typically include:

- Page titles and meta descriptions

- Body text, headings, image alt text

- Internal links pointing to other pages on the site

- External links pointing to other sites

- Media files like images, videos, docs

- Authorship and publishing dates

- And hundreds more granular details!

When you run a search query, the engine scans its massive index within milliseconds using clever data structures like inverted indexes. It pulls the most relevant matches and serves up results according to complex ranking calculations.
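The lookup step can be sketched in a few lines. This is a deliberately simplified illustration: it keeps only documents containing every query term and orders them by how often those terms appear, which is far cruder than any production ranking. The `DOCS` data and the `build_index` and `search` functions are assumptions made for the example.

```python
import re
from collections import Counter, defaultdict

# Hypothetical indexed documents: document ID -> text.
DOCS = {
    "doc1": "fresh coffee beans and coffee grinders",
    "doc2": "coffee shops near me",
    "doc3": "tea and fresh herbs",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Inverted index with counts: term -> {doc_id: occurrences}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, count in Counter(tokenize(text)).items():
            index[term][doc_id] = count
    return index


def search(index, query):
    """Return IDs of documents containing every query term, best match first."""
    terms = tokenize(query)
    if not terms:
        return []
    # Intersect the posting lists so only pages with all terms survive.
    matches = set(index.get(terms[0], {}))
    for term in terms[1:]:
        matches &= set(index.get(term, {}))

    def score(doc_id):
        # Toy relevance: total occurrences of the query terms in the page.
        return sum(index[term][doc_id] for term in terms)

    # Higher score first; ties broken by ID so the order is stable.
    return sorted(matches, key=lambda doc_id: (-score(doc_id), doc_id))


index = build_index(DOCS)
print(search(index, "coffee"))        # ['doc1', 'doc2']
print(search(index, "fresh coffee"))  # ['doc1']
```

Because the work happens on the precomputed index rather than on the pages themselves, the cost of a query depends on the length of the posting lists, not on the size of the whole web.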

Without comprehensively indexing the web, search algorithms would struggle to quickly retrieve and analyze enough information to generate complete, relevant results for our queries.

Pro Tip: Pay close attention to optimizing page titles, meta descriptions, alt text, and body content with keywords during SEO. These directly impact indexing and rankings!

Secret #3: Ranking Algorithms – The Brains Behind Search Relevancy

Ranking refers to the process search engines use to determine the order in which results appear on a search engine results page (SERP). After webpages have been crawled and indexed, algorithms must analyze the collected data and decide which pages are most relevant to a user’s query to rank them accordingly.

Ranking calculations use complex mathematical formulas and models to assign a score to each webpage. Hundreds of different factors may be considered when calculating these scores. Some of the key ranking signals include:

Relevance to the search keywords/intent

A core ranking signal is how relevant a page’s content is to the searcher’s query and underlying intent. Pages optimized with keywords semantically related to the search terms tend to receive higher ranks.

Authority metrics like PageRank or Domain Authority

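Search engines evaluate a site’s reputation and authority using link-based measures such as Google’s PageRank. Third-party scores like Moz’s Domain Authority are not used by search engines themselves, but SEO practitioners rely on them to estimate the same idea. Pages on more trusted domains tend to score better.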

Quality and quantity of inbound links

Algorithms assess the number and quality of external links pointing to a page from other sites. More links, especially from authoritative sources, signify greater importance.

On-page optimization and keywords usage

Natural, seamless usage of keywords in page titles, headings, metadata, alt text, and body content boosts relevance. However, over-optimization such as keyword stuffing can get pages penalized.

Usability signals like click-through rates and dwell times

User engagement metrics help search engines gauge real-world usefulness. Higher CTRs and dwell times indicate pages satisfy searcher intent.

Site speed and performance

Fast-loading, optimized webpages enhance user experience, so search rankings factor in site speed as a signal. Faster sites tend to rank better.

User experience and engagement

Beyond speed, overall site UI, navigation, mobile-friendliness, security, and ability to engage visitors impact rankings as good UX keeps searchers happy.

Analyzing these ranking signals in combination allows search algorithms to effectively discern and promote the most valuable pages for search queries while maintaining a quality search experience.
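One simple way to picture signals being combined is a weighted sum. The sketch below is purely illustrative: the signal names, the 0-to-1 values, and the `WEIGHTS` are invented for this example, and real ranking formulas are undisclosed and far more complex.

```python
from dataclasses import dataclass


@dataclass
class PageSignals:
    url: str
    relevance: float   # each signal is a made-up value between 0 and 1
    authority: float
    engagement: float
    speed: float


# Hypothetical weights; real search engines do not publish theirs.
WEIGHTS = {"relevance": 0.5, "authority": 0.25, "engagement": 0.15, "speed": 0.10}


def score(page):
    """Combine the signals into one number using the weights above."""
    return sum(weight * getattr(page, name) for name, weight in WEIGHTS.items())


def rank(pages):
    """Order pages from highest to lowest combined score."""
    return sorted(pages, key=score, reverse=True)


pages = [
    PageSignals("https://example.com/fast-but-thin", 0.25, 0.5, 0.5, 1.0),
    PageSignals("https://example.com/in-depth-guide", 1.0, 0.5, 0.75, 0.5),
    PageSignals("https://example.com/off-topic", 0.0, 1.0, 0.5, 0.5),
]

for page in rank(pages):
    print(f"{score(page):.3f}  {page.url}")
```

Notice that in this toy setup the off-topic page finishes last despite having the highest authority, because relevance carries the most weight.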

On-Page Content

High-quality content optimized with keywords ranks well due to its relevance for searcher intent. Long-form, useful, engaging content over thin, duplicated, or low-value content is favored.

User Engagement

How people interact with a page also feeds into ranking calculations. Metrics like click-through rates, bounce rates, and session times help determine how engaging the content is for visitors.

Countless other ranking factors are considered by algorithms, like trust indicators, site speed, structured data, etc. Google alone is widely reported to use over 200 signals! The exact formula is a closely guarded secret.

By analyzing hundreds of signals in combination, search engines aim to satisfy user intent with the most relevant results. Gaming the system through shady tactics rarely gives sustainable results. For long-term success, focus on creating high-quality content that answers searcher needs.
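To see why links from important pages count for more, here is a minimal sketch of the textbook PageRank calculation, the link-based authority idea mentioned earlier. It is the simplified published form of the algorithm, not Google's production system, and the four-page `LINKS` graph is made up for the demonstration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. links: page -> list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page gets a small baseline share regardless of links.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * ranks[page] / n
                for other in pages:
                    new_ranks[other] += share
        ranks = new_ranks
    return ranks


# Hypothetical site structure: page -> pages it links to.
LINKS = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}

ranks = pagerank(LINKS)
for page, value in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {value:.3f}")
```

In this toy graph, "home" ends up with the highest score because every other page links to it, while "orphan" ranks lowest because nothing links to it.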

The Future of Smarter, Faster Search

The brilliant minds at companies like Google are continually developing search algorithms using cutting-edge technology. Their work is never done as the web continues to evolve rapidly.

AI and machine learning advances are enabling more intuitive, semantic search capabilities. Instead of matching keywords, algorithms can better understand the underlying intent behind search queries, even if not directly expressed.

Quantum computing could one day unlock far faster processing for analyzing massive datasets and generating hyper-relevant results. Google runs an active quantum computing research program, though applying it to search remains speculative.

While the nitty-gritty details of search algorithms are hidden, they continue to advance rapidly, enhancing our journey through the digital universe.

Here are some ways web searches are likely to evolve in the future:

- More conversational interfaces – Search will become more informal and interactive using natural language processing. Instead of just typing keywords, users will engage in dialogue with search engines.

- Greater personalization – Search results will be highly customized based on individual user profiles, search history, location, preferences, and context. This provides more relevant results.

- Enhanced voice search – Voice assistants like Siri and Alexa will improve, allowing people to search hands-free using verbal queries. Voice search is more natural and convenient.

- Expanded semantic search – Search engines will go beyond keywords to better understand the meaning and intent behind queries, even if not directly stated. This enables a more intuitive experience.

- Visual search – Image recognition and computer vision will enable searching with images instead of just text. Snap a photo on your phone; a visual search will identify objects/places.

- Augmented reality – AR can overlay helpful information about people, places, and things right in your field of vision as you search. This blends the digital and physical.

- Faster processing – Quantum computing could eventually provide immense power for searching and analyzing massive datasets.

- Increased automation – AI and bots can conduct more searches and tasks automatically based on user needs and preferences.

- Continued mobile optimization – Search on smartphones and devices will become even more streamlined as mobile usage continues to outpace desktop.

While the specifics are uncertain, search will evolve dramatically to be more convenient, intuitive, visual, and assistive. But the core goal will remain: connecting people with the most relevant information at lightning speed.

Key Takeaways: How Search Algorithms Work Their Magic

Let’s recap the essential secrets underlying the mathematical magic of search engines:

- Tireless crawlers visit billions of web pages to collect data

- Comprehensive indexing catalogs and structures content for retrieval

- Ranking algorithms weigh multiple signals to calculate relevance

- Authority, backlinks, content, and UX metrics are top ranking factors

- AI powers ongoing search innovations, and quantum computing may follow

Internalizing these core principles equips you to optimize web properties for higher visibility through ethical SEO best practices. Remember that search is designed for consumers, not creators. Focus on providing content that solves your audience’s needs to earn trust and awareness.

Search pioneers like Google are on an endless quest to enhance the web experience. While search algorithms contain hidden complexities, their overarching purpose remains beautifully simple – to connect people with meaningful information.

Ready to unlock greater visibility and web traffic through search engine optimization? The digital marketing experts at MAM Digital Pro can help you master ethical SEO and make your brand stand out. Request a free website audit and competitive SEO analysis to kickstart your success today.

FAQs

- How does Google search work?

Google’s search engine relies on web crawlers continuously exploring the internet and collecting information about webpages. This data is then indexed and ranked using many signals, including link analysis in the tradition of Google’s original PageRank algorithm, along with relevance, popularity, and authority. When you search, Google checks its index for matching webpages, assigns them rank scores, and returns the results it deems most relevant to the user.

- What is a search engine, and how does it work?

A search engine is a web-based tool that enables users to search the internet for information. It uses automated crawlers to browse billions of web pages, indexing their content and metadata. When a user enters a query, algorithms analyze the index to find matching web pages, rank them, and display the most relevant results to the user. The significant components are crawling, indexing, and ranking.

- How does ranking work in search engines?

Search engine ranking refers to how algorithms determine the order of results on SERPs. The ranking considers relevance, authority, inbound links, user experience signals, and more. Each page receives a rank score calculated from these factors. Results with the highest rank scores appear first while low-scoring pages appear later. The exact formulas are trade secrets.

- What are the parts of a search engine?

The critical components of a search engine are the crawler, indexer, ranking algorithm, and user interface. The crawler locates and gathers data from web pages. The indexer stores and organizes this data for quick retrieval. Algorithms analyze indexed data to rank pages. The user interface allows people to enter queries and view search results.

- How are search engines helpful to us?

Search engines are essential tools for online research and discovery. They allow people to look up information on any topic in seconds instead of manually browsing the vast internet. SEO also helps connect users with businesses offering products/services they need. Fast access to information improves productivity.

- How do search engines generate revenue?

Most search engine revenue comes from selling online advertising. Text ads alongside organic results are auction-based pay-per-click, meaning advertisers pay only when ads are clicked. Display advertising and affiliate programs also generate revenues. Data analytics is another revenue stream for search companies.

- How do search engines determine what pages show up on SERPs?

Search engine algorithms determine page rankings on SERPs using hundreds of factors. The most important include relevance to the search query, authority signals like inbound links, keyword optimization, quality and uniqueness of content, trustworthiness, and positive user experience signals. Combining scores for these factors produces each page's position in the results.

- Can you pay to be higher ranked on search engines?

Yes, you can pay for higher visibility on search engines through pay-per-click advertising programs like Google Ads. Advertisers bid on keywords, and their ads can appear above organic results when users search for related terms. Higher bids and better ad quality generally earn more prominent placement. However, organic rankings cannot be bought; they depend on the algorithms.

- How do search engines impact web traffic?

Search engines drive massive web traffic as they are the primary online discovery tool. Ranking on the first page for relevant keywords can send thousands of visitors to a site. Appearing higher in organic results generally increases click-throughs and traffic. SEO aims to improve rankings and visibility.

- Why do search engines keep changing their algorithms?

Search engines continually evolve their ranking algorithms to provide the most relevant results to users. Factors like new types of content, changing user behavior, and growth of indexing scale mean algorithms must adapt. Also, changing algorithms minimizes manipulation by making SEO a moving target. Better relevance enhances the search experience.