Technical SEO refers to ensuring that a website meets the technical requirements of modern search engines, with the goal of improving its organic rankings. It focuses on the behind-the-scenes aspects of a website that search engine crawlers rely on to crawl, understand, and rank pages.

- Critical Elements of Technical SEO

- Myth 1: Meta Keywords Drive Ranking Relevance

- Myth 2: More Outbound Links Universally Boost Rankings

- Myth 3: XML Sitemaps Guarantee Full Indexation

- Myth 4: JavaScript Rendered Content Isn't Indexable

- Myth 5: Server Location Matters for Local Rankings

- Myth 6: Faster Servers Automatically Improve Rankings

- Myth 7: Low Traffic Sites Can't Rank

- Myth 8: PDFs Are Invisible To Search Engines

- Myth 9: More SEO Plugins Equals Better Results

- Myth 10: Security Certificates Don't Influence SEO

- Conclusion

Critical Elements of Technical SEO

Critical elements include the following.

- Site Speed – Optimizing images, code, hosting infrastructure, and overall site performance to achieve fast page load times. Site speed is a known ranking factor and significantly impacts user experience.

- Code Optimization – Cleaning up sloppy website code, selecting efficient site frameworks, implementing best-practice markup techniques, and leveraging performance testing tools to diagnose technical bottlenecks. Good code quality aids rendering.

- Server Settings – Configuring hosting servers and environments for search-friendly functions like proper URL redirects, efficient crawling capacities, and optimal indexation permissions. Stable servers are critical for technical SEO success.

- Site Architecture – Structuring a website’s content through clean internal URL paths, easy-to-navigate information hierarchies, XML sitemaps, and well-organized, crawler-accessible internal linking. This helps search bots understand pages.
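
As one illustration of the "proper URL redirects" point under Server Settings, the sketch below looks for redirect chains, where an old URL passes through one or more intermediate URLs before reaching its destination. The redirect map, the URL paths, and the function names are all hypothetical; in practice the rules would come from your own server configuration.

```python
def resolve_redirects(redirects, start, max_hops=10):
    """Follow a redirect map from `start` and return (final_url, hops).

    Raises ValueError on a redirect loop or when the chain exceeds max_hops.
    """
    seen = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in seen:
            raise ValueError(f"redirect loop at {current}")
        seen.append(current)
        if len(seen) - 1 > max_hops:
            raise ValueError("too many redirects")
    return current, len(seen) - 1


def find_chains(redirects):
    """Return {source: final_url} for every source that needs more than one hop."""
    chains = {}
    for source in redirects:
        final, hops = resolve_redirects(redirects, source)
        if hops > 1:
            chains[source] = final
    return chains


# Hypothetical redirect rules, as they might be exported from a server config.
REDIRECTS = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/promo": "/new-page",
}

# Only "/old-page" takes two hops, so it is the one worth pointing straight at "/new-page".
print(find_chains(REDIRECTS))
```

Pointing each chained source directly at its final destination saves crawlers and users an extra round trip per hop.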

By comprehensively combining strong technical foundations with robust content development and digital marketing strategies, websites can maximize organic rankings, visibility, and conversions. Technical SEO creates polished, fast, and satisfying user experiences, leading to higher rankings and lower bounce rates.

Technical SEO is filled with misconceptions that send websites down the wrong optimization paths, wasting valuable time and resources while hurting search visibility. In this myth-busting guide, we’re tackling the top 10 technical SEO fallacies that need to finally be put to rest so you can boost your organic growth.

Myth 1: Meta Keywords Drive Ranking Relevance

The statement “Meta Keywords Drive Ranking Relevance” is a widely debunked myth in the SEO world. While meta keywords were once considered a ranking factor, Google and other search engines have officially confirmed that they are no longer used directly for ranking purposes.

Here’s why the myth persists:

- Legacy information: Older SEO resources and outdated information may still promote meta keywords as a ranking factor, perpetuating the myth.

- Misinterpretation of correlation: Some may mistakenly interpret a correlation between high-ranking websites and optimized meta keywords, assuming a direct cause-and-effect relationship.

However, experts agree on the following points:

- Google doesn’t use meta keywords for ranking: Google has publicly stated that meta keywords have not been used as a ranking factor since 2009 (Search Engine Journal).

- Focus on content relevance: Google prioritizes content that naturally addresses user search intent, not keywords stuffed into meta tags.

- Other meta tags can still be valuable: Meta keywords themselves are ignored for ranking, but the title tag and meta description help search engines present your page and can improve click-through rates (CTRs) from search results.

Expert Tips:

- Don’t keyword stuff: Focus on creating high-quality content that naturally includes relevant keywords.

- Write descriptive meta tags: Keep titles and meta descriptions relevant and concise. If you still fill in meta keywords, don’t rely on them for ranking.

- Optimize for user intent: Understand your target audience’s search intent and create content that directly addresses their needs.

- Focus on on-page SEO: Optimize your website’s title tags, headings, and internal linking for relevance and user experience.

- Build high-quality backlinks: Earn backlinks from authoritative websites to improve your website’s trust and authority.
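
To make the on-page advice concrete, here is a minimal sketch that inspects the head of a page using only Python’s standard library. It flags a missing title and notes when a meta keywords tag is present. The meta description check is an extra assumption of this sketch, and the class name, function name, and sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class HeadTagAudit(HTMLParser):
    """Collect the <title> text and named <meta> tags from an HTML document."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = attrs.get("name")
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and name:
            self.meta[name.lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html):
    """Return a list of human-readable notes about the page's head tags."""
    parser = HeadTagAudit()
    parser.feed(html)
    notes = []
    if not parser.title.strip():
        notes.append("missing <title>")
    if not parser.meta.get("description", "").strip():
        notes.append("missing meta description")
    if "keywords" in parser.meta:
        notes.append("meta keywords present (ignored by Google for ranking)")
    return notes


# Hypothetical page: it has a title and meta keywords but no meta description.
SAMPLE = """<html><head>
<title>Blue Widgets | Example Shop</title>
<meta name="keywords" content="widgets, blue widgets, cheap widgets">
</head><body></body></html>"""

for note in audit_head(SAMPLE):
    print(note)
```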

Remember, effective SEO goes beyond keyword manipulation. By creating valuable content, optimizing for user experience, and building genuine authority, you can achieve substantial search engine visibility without relying on outdated myths like the importance of meta keywords for ranking.

Myth 2: More Outbound Links Universally Boost Rankings

The statement “More outbound links universally boost rankings” is a common SEO misconception, and experts generally agree it’s not entirely accurate. While outbound links can be valuable for SEO, adding more indiscriminately can have negative consequences.


Here’s why “more links = better rankings” is misleading:

- Link quality matters more than quantity: Google weighs the quality and relevance of links over sheer quantity. A few outbound links to relevant, authoritative sources are far more valuable than many low-quality ones.

- Excessive linking can be penalized: Google may penalize websites with unnatural linking patterns, including those with excessive outbound links. This can hurt your overall ranking potential.

- Relevance and context are crucial: The context and relevance of your outbound links matter. Linking to relevant and authoritative websites within your content shows Google you’re providing valuable information to your users, indirectly helping rankings.

However, outbound links can still be beneficial when used strategically:

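- Links can improve website authority: Inbound links (backlinks) from high-quality websites can signal to Google that your website is valuable and trustworthy, improving overall authority and ranking potential. This benefit comes from links pointing to your site, not from the outbound links you add.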

- Links can drive referral traffic: Outbound links send your readers to other websites. Referral traffic to your own site comes from other sites linking to you, which citing relevant sources and building relationships with them can encourage.

- Links can provide context and value: Linking to relevant sources within your content can provide context and value for users, improving user experience and potentially influencing ranking signals.

Expert Tips:

- Focus on quality over quantity: Prioritize building backlinks from high-quality and relevant websites.

- Link naturally and contextually: Integrate outbound links seamlessly within your content where they provide value for users.

- Avoid unnatural linking patterns: Don’t artificially inflate your link profile with excessive or irrelevant links.

- Monitor your backlinks: Track your backlinks regularly and remove any low-quality or spammy links.

- Focus on building relationships: Building genuine relationships with other websites can lead to organic and high-quality backlinks over time.
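
Before deciding whether a page links out too much or too little, it helps to see what it actually links to. The sketch below, assuming a page’s HTML is already available as a string, sorts its links into internal, outbound, and outbound nofollow. The names and sample markup are hypothetical, and treating any different hostname (including subdomains) as outbound is a simplification.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect (href, rel tokens) for every <a> element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attrs = dict(attrs)
            href = attrs.get("href")
            if href:
                rel = (attrs.get("rel") or "").lower().split()
                self.links.append((href, rel))


def summarize_links(html, page_url):
    """Group a page's links into internal, outbound, and outbound nofollow."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc
    summary = {"internal": [], "outbound": [], "outbound_nofollow": []}
    for href, rel in collector.links:
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        if parsed.netloc == site_host:
            summary["internal"].append(absolute)
        elif "nofollow" in rel:
            summary["outbound_nofollow"].append(absolute)
        else:
            summary["outbound"].append(absolute)
    return summary


# Hypothetical article body with one internal link and two outbound links.
SAMPLE_HTML = """
<p><a href="/about">About us</a></p>
<p><a href="https://developers.google.com/search">Search docs</a></p>
<p><a href="https://ads.example.net/offer" rel="nofollow sponsored">Offer</a></p>
<p><a href="mailto:hi@example.com">Email</a></p>
"""

summary = summarize_links(SAMPLE_HTML, "https://www.example.com/blog/post")
for group, urls in summary.items():
    print(group, len(urls))
```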

Remember, outbound linking should be a strategic element of your SEO strategy, not just a numbers game. Focus on building relevant and high-quality backlinks that provide value to your users and contribute to your website’s overall authority and credibility.

By following these tips and avoiding the “more links is better” mentality, you can leverage outbound links effectively to improve your SEO performance without risking penalties or compromising user experience.

Myth 3: XML Sitemaps Guarantee Full Indexation

The statement “XML sitemaps guarantee full indexation” is a misconception. While sitemaps can benefit SEO, they’re not a magic bullet for complete indexing.


Here’s why:

Benefits of XML Sitemaps:

- Enhanced Crawlability: As Moz describes it, a sitemap works like a map for search engine bots. By listing essential pages in a structured format, you guide them efficiently through your website, making it easier to discover and index valuable content.

- Improved indexing potential: While not guaranteed, sitemaps can help search engines prioritize your important pages, potentially leading to faster indexing, as described by Yoast.

- Identification of indexing issues: According to Search Engine Journal, some sitemap tools can highlight potential roadblocks to indexing, such as blocked URLs or crawl errors. Fixing these issues can smooth the path for search engine bots.
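
For readers who have not seen the "structured format" in question, the following sketch builds a small sitemap with Python’s standard library, using the sitemaps.org namespace. The page list, dates, and function name are hypothetical, and a real site would normally generate this from its CMS or a plugin.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; last_modified may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; only the first has a known last-modified date.
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/technical-seo-myths", None),
]

print(build_sitemap(PAGES))
```

Listing a URL here only tells crawlers that it exists; whether it is indexed remains the search engine’s decision.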

Limitations of XML Sitemaps:

- No Indexing Guarantee: Simply submitting a sitemap doesn’t automatically grant your pages a place in the search engine index. Relevance, content quality, and technical limitations can still influence indexing decisions.

- Not a substitute for SEO best practices: Sitemaps are just one piece of the SEO puzzle. Solid on-page optimization, including relevant keywords, high-quality content, and internal linking, remains crucial for ensuring search engines understand and value your website, as described by Backlinko.

- Potential for being ignored: According to Semrush, search engines may in some cases choose to bypass pages listed in your sitemap, even if they’re technically accessible. This underscores the importance of focusing on overall website quality.

Expert Opinion:

- Use sitemaps strategically: Leverage sitemaps as a valuable tool to improve crawlability and provide search engines with essential information about your website. However, don’t rely on them to guarantee indexing.

- Focus on overall SEO: According to Ahrefs, you should implement solid on-page optimization, build high-quality backlinks, and create engaging content to strengthen your website’s overall ranking potential.

- Monitor your indexing status: Regularly track your website’s indexing status using tools like Google Search Console to identify pages that haven’t been indexed and address any underlying issues.
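
One way to act on the monitoring tip is to compare the URLs in your sitemap with the URLs a search engine reports as indexed. The sketch below assumes you have exported that indexed list by hand (for example from a Search Console report) and does not call any Google API. The sample sitemap, URLs, and function names are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> values found in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def indexing_gaps(sitemap_xml, indexed_urls):
    """Compare sitemap URLs against a manually exported list of indexed URLs."""
    listed = sitemap_urls(sitemap_xml)
    indexed = set(indexed_urls)
    return {
        "listed_not_indexed": sorted(listed - indexed),
        "indexed_not_listed": sorted(indexed - listed),
    }


# Hypothetical sitemap and hypothetical export of indexed URLs.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/pricing</loc></url>
  <url><loc>https://www.example.com/blog/new-post</loc></url>
</urlset>"""

INDEXED = [
    "https://www.example.com/",
    "https://www.example.com/pricing",
    "https://www.example.com/old-landing-page",
]

gaps = indexing_gaps(SITEMAP_XML, INDEXED)
print(gaps["listed_not_indexed"])
print(gaps["indexed_not_listed"])
```

URLs that are listed but not indexed are the ones to investigate for quality or technical issues.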

Remember, XML sitemaps are a powerful tool in your SEO toolbox, but they’re not a silver bullet. Using them effectively alongside other SEO best practices can significantly improve your website’s discoverability and help it climb the search engine ladder.

Bonus Tip: Consider submitting your sitemap through Google Search Console and Bing Webmaster Tools so that the major search engines know it exists and can access the information it contains.

By incorporating these insights and staying informed about the latest SEO trends, you can ensure your website thrives in the ever-evolving search engine landscape.

Myth 4: JavaScript Rendered Content Isn’t Indexable

The statement “JavaScript rendered content isn’t indexable” is partially accurate but outdated. While it’s true that search engines historically had difficulty understanding and indexing content dynamically generated by JavaScript, significant advancements have been made in recent years.

Here’s a nuanced breakdown:

Historically:

- Limited JavaScript parsing: Search engine crawlers primarily relied on static HTML code, often missing JavaScript-generated or manipulated content. This meant valuable information within dynamic web applications could be invisible to search engines.

- SEO challenges: Websites relying heavily on JavaScript for content display faced difficulty ranking well due to this limited indexing.

Present-day:

- Improved JavaScript understanding: Search engines have significantly improved their ability to parse and understand JavaScript code. This allows them to access and index content dynamically generated by JavaScript frameworks like React and Angular.

- Focus on user experience: Search engines prioritize websites that provide a good user experience. Since JavaScript is often used to create interactive and engaging experiences, search engines actively adapt to crawl and index it effectively.

However, challenges remain:

- Complexity still matters: Search engines may struggle with complex JavaScript code or websites that rely heavily on AJAX or single-page applications (SPAs). This can lead to incomplete indexing and missed ranking opportunities.

- Best practices are crucial: Following best practices like server-side rendering (SSR) and structured data markup can help search engines understand your JavaScript-rendered content more easily.
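
Structured data markup is commonly delivered as a JSON-LD script tag in the page’s HTML. The sketch below generates one for an article using schema.org vocabulary; the function name and all field values are hypothetical, and the chosen properties are only a small illustrative subset.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> tag describing an article (schema.org vocabulary)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the surrounding script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values for illustration only.
print(article_json_ld(
    "10 Technical SEO Myths",
    "Jane Doe",
    "2024-01-15",
    "https://www.example.com/seo-myths",
))
```

Emitting this tag in the server-rendered HTML means crawlers can read it without executing any JavaScript.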

Expert Opinion:

- Don’t fear JavaScript, but optimize it: While JavaScript isn’t inherently invisible anymore, optimizing it for search engine crawlers is still essential for optimal visibility.

- Focus on user experience: Prioritize creating a user-friendly website with engaging content, regardless of whether JavaScript or static HTML renders it.

- Implement SEO best practices: Utilize server-side rendering, structured data markup, and other SEO techniques to make your JavaScript content easily understandable for search engines.

- Test and monitor: Regularly test how search engines crawl and index your JavaScript content using tools like Google Search Console. This helps identify any indexing issues and allows for timely optimization.
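
A quick local test, separate from what Search Console reports, is to check which key phrases already appear in the raw HTML your server sends before any JavaScript runs. The sketch below is a rough approximation under that assumption: it ignores script, style, and template contents and does not execute JavaScript. The sample page and names are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that is present in the raw HTML, ignoring script-like elements."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_static_html(html, phrases):
    """Return the phrases that do not appear in the unrendered HTML text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in phrases if p.lower() not in text]


# Hypothetical single-page-app shell: the product name only exists inside a script.
SPA_SHELL = """<html><body><h1>Acme Store</h1><div id="root"></div>
<script>render({title: "Winter Boots", price: "$89"})</script></body></html>"""

print(missing_from_static_html(SPA_SHELL, ["Acme Store", "Winter Boots"]))
```

A phrase reported as missing is not necessarily unindexable; it simply depends on the crawler rendering your JavaScript, which makes it a candidate for server-side rendering.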

Remember, search engines constantly evolve, and their ability to understand JavaScript steadily improves. By focusing on user experience and implementing best practices, you can ensure your JavaScript-rendered content is visible to search engines and contributes positively to your website’s SEO performance.

Additional tips:

- Use a CDN: A Content Delivery Network (CDN) can cache your website’s static content closer to users, improving website speed and potentially helping search engines crawl your content more efficiently.

- Provide alternative content: Consider offering text summaries or static versions of your JavaScript-rendered content for improved accessibility and search engine visibility.

- Stay informed: Keep yourself updated on the latest SEO trends and advancements in search engine capabilities related to JavaScript.

By following these expert tips and staying informed, you can harness the power of JavaScript while ensuring your website remains discoverable by search engines and your target audience.

Myth 5: Server Location Matters for Local Rankings

It is often claimed that the location of a website’s server directly affects local rankings, because Google tailors results to the user’s location. In practice, Google doesn’t care which host you use, but it does care about site speed, reliability, server uptime, and security. Server location also influences latency, cost, and legal compliance, all of which depend on your choice of host.


While Google hasn’t explicitly confirmed server location as a direct ranking factor, experts generally agree it can indirectly impact local rankings through several crucial factors:

1. Website Speed: A server that is physically closer to your target audience generally means lower latency and faster loading times. This is critical for local SEO, as Google prioritizes fast-loading websites in search results. Users are likelier to bounce from a slow site, harming user engagement and ultimately hurting rankings.

2. User Experience: Slower loading times due to a distant server can frustrate users and negatively impact their overall experience with your website. This can influence user reviews and behavior signals, which Google considers in ranking algorithms.

3. IP Address and Geolocation: While not a direct ranking factor, the IP address of your server can influence how Google geographically associates your website. This can be beneficial if your server is in the same region as your target audience, potentially leading to higher visibility in local search results.

4. Content Relevance: Some experts suggest that a server within your target audience’s region might provide access to more local data and information, allowing you to tailor your content and targeting accordingly. This can potentially improve content relevance and local search performance.
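
To get a feel for how much distance alone contributes to loading time, the sketch below estimates a lower bound on network round-trip time from the great-circle distance between a user and a server. It assumes signals travel through optical fiber at roughly 200 km per millisecond, which is an approximation; real latency is higher because of routing, queuing, and server processing. The coordinates and function names are illustrative.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative example: a user in London and a server in Sydney.
distance = great_circle_km(51.5074, -0.1278, -33.8688, 151.2093)
print(f"{distance:.0f} km, at least {min_round_trip_ms(distance):.0f} ms per round trip")
```

Because a page load involves several round trips, this per-trip floor adds up, which is the gap a CDN or a closer server is meant to shrink.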

However, it’s essential to consider these nuances:

- Content and On-Page SEO: Strong local content, optimized for local keywords and user intent, still plays a crucial role in local rankings, regardless of server location.

- CDN Integration: Content Delivery Networks (CDNs) can cache your website content on servers closer to users, regardless of your physical server location, significantly improving website speed.

- Domain Extension: A country-specific domain extension (ccTLD) like .co.uk can provide some local ranking benefits, but it’s not a substitute for solid local SEO practices.

While server location isn’t a guaranteed ranking boost, it can indirectly impact local rankings through its influence on website speed, user experience, and potential for local content optimization. Choosing a server closer to your target audience can be a valuable strategy alongside other local SEO best practices.

Expert Tips:

- Prioritize website speed and optimize for local search terms.

- Consider using a CDN for a better user experience.

- Choose a server location within your target audience’s region if feasible.

- Implement strong local SEO practices like NAP consistency and local citations.

- Monitor your website performance and local ranking signals regularly.

Remember, local SEO is a holistic effort. Optimizing for server location can be a valuable piece of the puzzle, but it’s not a magic bullet. Combining server location considerations with solid on-page and off-page SEO can significantly improve your local search visibility and attract more local customers.

Myth 6: Faster Servers Automatically Improve Rankings

The statement “Faster servers automatically improve rankings” is a nuanced topic, and experts generally agree it’s not entirely accurate. While server speed can indirectly influence rankings in some ways, it’s not a direct ranking factor and shouldn’t be viewed as a magic bullet for SEO success.

Here’s why relying solely on faster servers for ranking improvement might be misleading:

- Google prioritizes relevance and quality over speed: While website speed is a crucial factor for user experience, Google’s algorithms prioritize content that’s relevant, high-quality, and meets users’ search intent. A fast website with irrelevant content won’t outperform a slower website with valuable information.

- Speed is a threshold, not a guarantee: Faster loading times can improve user engagement and may reduce bounce rates. However, simply having a fast server doesn’t guarantee high rankings. Other factors like content, backlinks, and on-page optimization play a much more significant role.

- Cost-benefit analysis: Upgrading to a significantly faster server can be expensive, and the ROI might not justify the cost if it doesn’t substantially increase organic traffic or conversions.

However, faster servers can indirectly benefit your SEO in several ways:

- Improved user experience: Faster loading times lead to better user experience, potentially reducing bounce rates and increasing user engagement. Google considers these metrics when ranking websites.

- Mobile-friendliness: Faster servers can improve website performance on mobile devices, which is crucial for SEO as Google prioritizes mobile-friendly websites.

- SEO best practices: Optimizing server speed is often part of a broader SEO strategy that includes other best practices like content optimization and link building. This holistic approach can lead to overall ranking improvements.

Expert Tips:

- Focus on a balanced approach: Prioritize creating high-quality content, building backlinks, and optimizing on-page SEO elements. Consider server speed as a complementary factor to these core strategies.

- Measure and analyze: Use analytics tools to track your website’s performance metrics like loading times and bounce rates. This data can help you determine if server speed is a bottleneck and if upgrading would be beneficial.

- Optimize for mobile: Ensure your website performs well on mobile devices, as Google prioritizes mobile-friendliness in search results.

- Choose a reliable hosting provider: Invest in a reliable hosting provider with a good uptime record to ensure consistent website performance.

- Monitor and improve: Monitor your website’s performance and make necessary improvements, including server optimization.
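
The "measure and analyze" tip can be sketched as follows: given timing samples that record both server response time (time to first byte) and total load time, compare the two to see how much of the wait the server is actually responsible for. The sample numbers, field names, and the choice of the 75th percentile are assumptions made for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def server_share(samples, pct=75):
    """Summarize what fraction of load time is spent waiting on the server."""
    ttfb = percentile([s["ttfb_ms"] for s in samples], pct)
    load = percentile([s["load_ms"] for s in samples], pct)
    return {"ttfb_ms": ttfb, "load_ms": load, "server_share": round(ttfb / load, 2)}


# Hypothetical measurements exported from an analytics or monitoring tool.
SAMPLES = [
    {"ttfb_ms": 180, "load_ms": 2400},
    {"ttfb_ms": 220, "load_ms": 3100},
    {"ttfb_ms": 200, "load_ms": 2800},
    {"ttfb_ms": 950, "load_ms": 3600},
]

print(server_share(SAMPLES))
```

If the server accounts for only a small share of total load time, as in this made-up data, front-end work such as image and script optimization is likely to pay off more than a server upgrade.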

Remember, SEO success requires a comprehensive approach focusing on user experience, quality content, and relevant optimization strategies. While a fast server can contribute, it’s not a guaranteed path to higher rankings. You can achieve sustainable organic traffic growth by prioritizing the core elements of good SEO and making data-driven decisions about server upgrades.

Myth 7: Low Traffic Sites Can’t Rank

The statement “Low Traffic Sites Can’t Rank” is a prevalent myth in the SEO world, but experts largely dismiss it as an oversimplification. While higher traffic can positively influence SEO, low-traffic sites can still rank well under the right conditions.

Here’s why the myth is misleading:

- Google prioritizes relevance and quality over traffic: While traffic can signal user engagement and website authority, it’s not the sole ranking factor. Google’s algorithms prioritize websites that offer the most relevant and high-quality content for specific search queries, regardless of current traffic levels.

- Many low-traffic websites rank for niche keywords: Niche keywords, with lower search volume but focused intent, can be perfect targets for low-traffic sites. By optimizing for these specific keywords and providing exceptional content, such websites can achieve high rankings and attract relevant traffic.

- New websites can start with low traffic and climb gradually: It’s unrealistic to expect high traffic instantly for any new website. Consistent effort in content creation, on-page SEO, and link building can help new and low-traffic websites gradually climb the rankings.

However, it’s essential to consider these nuances:

- Low traffic can indicate underlying issues: While not a direct ranking factor, low traffic can point to underlying issues like poor user experience, technical problems, or inadequate SEO efforts. Addressing these issues is crucial for long-term success.

- Competition plays a role: Ranking for highly competitive keywords can be challenging for low-traffic sites. Targeting less competitive niches or long-tail keywords can be more effective initially.

- Building authority takes time: Establishing website authority, which is influenced by factors like backlinks and brand recognition, takes time and consistent effort. Building authority can indirectly improve ranking potential even for low-traffic sites.

Expert Tips:

- Focus on high-quality content and relevant keywords.

- Optimize your website for on-page SEO factors.

- Build backlinks gradually from reputable sources.

- Promote your website through social media and other channels.

- Track your progress and analyze website data regularly.

- Be patient and consistent with your SEO efforts.

Remember, the key to SEO success lies in providing value to your target audience through high-quality content and user-friendly experiences. While low traffic may present initial challenges, it doesn’t preclude ranking success. Low-traffic sites can climb the rankings and attract relevant visitors by adopting the right strategies and focusing on quality.

Myth 8: PDFs Are Invisible To Search Engines

The statement “PDFs are invisible to search engines” is a misconception. While it’s true that PDFs can present some challenges for search engine crawling and indexing, they are not entirely invisible.

Here’s why:

- Search engines can access and process PDF content: Google, Bing, and other search engines have come a long way in their ability to understand and index PDF content. They can extract text, images, and even metadata from PDFs, making the information potentially visible in search results.

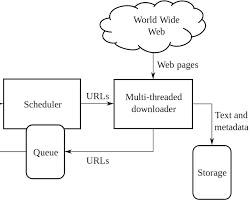

(Source: Google) architecture of web crawler

- Challenges exist but are not insurmountable: PDFs can pose challenges for search engines. Complex layouts, embedded images without alt text, and lack of proper metadata can make it difficult for search engines to understand the content and context of the information.

- Optimization strategies can help: Fortunately, there are several ways to optimize your PDFs for search engines:

- Use clear and concise titles and headings: Make sure your PDF titles and headings accurately reflect the content and include relevant keywords.

- Embed text, not just images: Use actual text for your content, not just scanned images, as search engines extract real text far more reliably than text locked inside images.

- Add alt text to images: Provide descriptive alt text for any images within your PDF to help search engines understand their context.

- Include relevant keywords: Incorporate relevant keywords throughout your PDF content, but avoid keyword stuffing.

- Utilize proper metadata: Fill out the PDF’s metadata fields with accurate information like title, author, and description.

- Create a text summary: Consider providing a text summary of your PDF content on the same page where you host the PDF, making it easier for search engines to crawl and index the relevant information.

Expert Tips:

- Test your PDFs: Check how Google has indexed your PDF, for example with a site: search restricted to filetype:pdf or through Google Search Console, and use what you find to identify areas for improvement.

- Offer alternative formats: Provide your content in multiple formats, such as HTML or Word documents, alongside your PDF to increase accessibility and search engine visibility.

- Promote your PDFs: Share your optimized PDFs on social media, relevant websites, and online communities to increase their visibility and potential for organic search traffic.

Remember, while PDFs might not be inherently invisible to search engines, optimizing them for proper crawling and indexing can significantly improve their visibility and ranking potential. By following these expert tips, you can ensure your valuable PDF content reaches its target audience through search engines.

Myth 9: More SEO Plugins Equals Better Results

The statement “More SEO plugins equals better results” is a common misconception in the SEO world. While some plugins can be valuable tools, overreliance on them can hinder your SEO efforts.

Here’s why simply adding more plugins isn’t the answer:

- Plugin bloat can slow down your website: Too many plugins can create code conflicts and slow down your website’s loading speed, a crucial ranking factor for search engines.

- Not all plugins are created equal: Some offer limited functionality or outdated practices, potentially harming your SEO efforts instead of improving them.

- Plugins can’t replace solid SEO strategies: High-quality content, robust on-page optimization, and link building are fundamental for SEO success. Plugins can only complement these core strategies, not replace them.

However, some SEO plugins can be valuable when used strategically:

- Keyword research and optimization: Tools like Ahrefs or Semrush can help you research relevant keywords and optimize your content accordingly.

- Technical SEO audits: Plugins like Yoast SEO, or standalone crawlers like Screaming Frog, can identify technical website issues that could impact your ranking.

- Sitemaps and robots.txt management: Some plugins simplify creating and managing sitemaps and robots.txt files, which can improve crawlability and indexing.
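
Whichever plugin writes your robots.txt, it is worth verifying what the resulting file allows. The sketch below uses Python’s standard urllib.robotparser to test a few URLs against a robots.txt supplied as text. The rules, URLs, and helper names are hypothetical, and Python’s parser may not match every search engine’s interpretation exactly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, as a plugin might generate it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_urls(parser, urls, user_agent="Googlebot"):
    """Return the URLs that the given user agent is not allowed to fetch."""
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


rules = load_rules(ROBOTS_TXT)
URLS = [
    "https://www.example.com/",
    "https://www.example.com/admin/settings",
    "https://www.example.com/blog/technical-seo-myths",
]

print(blocked_urls(rules, URLS))
print(rules.site_maps())
```

Running a check like this after plugin updates can catch an accidental Disallow rule before it affects crawling.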

Expert Tips:

- Focus on quality over quantity: Choose a few high-quality plugins that address specific needs and avoid installing plugins for functionalities you can easily manage manually.

- Regularly update and audit your plugins: Outdated plugins can pose security risks and compatibility issues. Keep your plugins updated and regularly audit their impact on your website’s performance.

- Don’t rely solely on plugins: SEO is a holistic approach. Use plugins as tools to support your core SEO strategies, not as a shortcut to success.

- Focus on user experience: Ultimately, the best SEO practices prioritize user experience. Choose plugins that enhance your website’s usability and functionality without compromising speed or performance.

Remember, SEO success isn’t about installing the most plugins. It’s about understanding your target audience, creating high-quality content, and optimizing your website for search engines and users. Using plugins strategically and focusing on the core principles of SEO, you can achieve sustainable organic traffic growth without relying on plugin overload.

Additional Tip: Before installing any plugin, research its functionality, user reviews, and potential impact on your website’s performance. Choose plugins that are compatible with your website platform and that offer features aligned with your specific SEO needs.

By following these expert tips and avoiding the “more plugins equals better results” trap, you can effectively leverage the power of SEO plugins and achieve optimal results for your website.

Conclusion

Technical SEO has no shortage of misguided conventional wisdom and outdated practices lingering as accepted standards. However, modern search engines have advanced beyond these oversimplified assumptions and ranking rule myths.

Stay on top of verified algorithm updates, developer documentation revisions, shifts in searcher behavior, and testing research to elevate your technical optimization efforts. Measure the performance gains from technical upgrades with data rather than chasing assumptions.

While no one has fully unlocked every search ranking secret, continually evolving your technical SEO foundations based on proven facts over fiction will unlock immense organic growth potential.